Creating a robots.txt file can be daunting for many website owners. It’s essential for managing how search engines interact with your site.

A robots.txt file tells search engine bots which parts of your site they may crawl. Without a well-configured one, crawlers can waste time on unimportant pages, or you might accidentally block pages you want found. Fortunately, free tools can generate this file for you. They simplify the process, even if you’re not tech-savvy.

They help ensure your site stays optimized for search engines. In this post, we will explore why robots.txt files are crucial and introduce the best free tools to create them. Understanding these tools can save you time and enhance your site’s performance. Let’s dive into the world of free robots.txt generator tools.

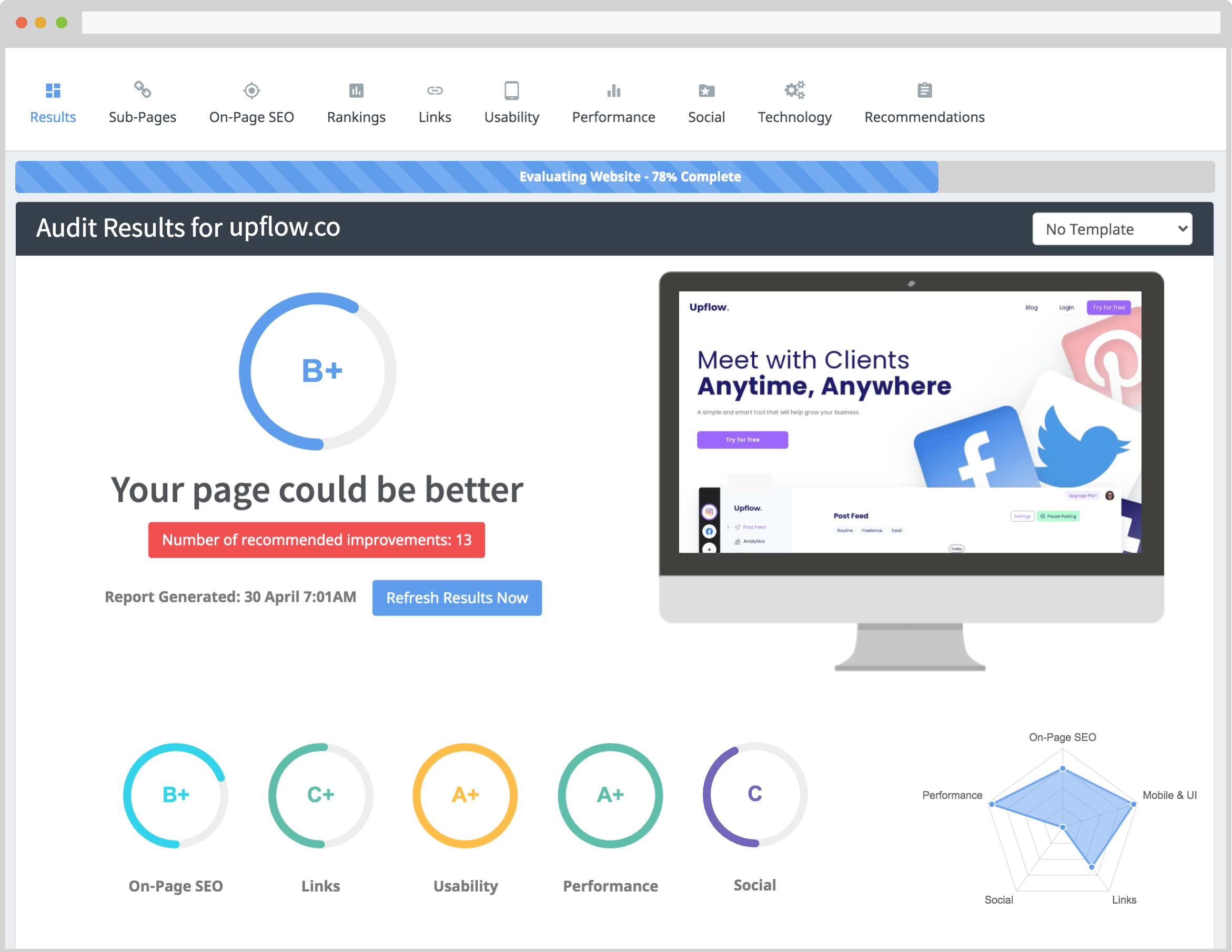

Credit: www.seoptimer.com

Introduction To Robots.txt

The robots.txt file is a small but powerful tool for your website. It tells search engine crawlers which pages they may crawl. With a free robots.txt generator tool, creating this file is easy.

Importance In SEO

The robots.txt file plays a crucial role in SEO. It helps manage how search engines interact with your site. By setting up this file correctly, you keep crawlers focused on the pages you want found, which can help those pages get discovered and appear in search results.

- Control Crawling: You decide which parts of the site search engines can access.

- Save Bandwidth: Prevent search engines from crawling unnecessary pages.

- Focus on Key Content: Help search engines spend their time on your most important pages, which supports your rankings.

Basic Structure

The robots.txt file has a simple structure. It consists of rules that direct search engine crawlers.

| Directive | Description | Example |
|---|---|---|
| User-agent | Specifies which crawler the rules below apply to (* means all crawlers) | User-agent: * |
| Disallow | Blocks crawling of URLs that start with the given path | Disallow: /private/ |
| Allow | Permits crawling of the given path, even inside a disallowed section | Allow: /public/ |

Here is a basic example:

```
User-agent: *
Disallow: /private/
Allow: /public/
```

By understanding these directives, you can create an effective robots.txt file. Use a free robots.txt generator tool to simplify this process.
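To see how a crawler reads these directives, you can run the example through Python’s standard-library parser, `urllib.robotparser`. This is a minimal sketch: the paths checked are hypothetical, and Python’s parser applies the first matching rule in a group (Google uses the most specific, longest match), so treat the results as an approximation of any particular search engine.

```python
from urllib.robotparser import RobotFileParser

# The basic example from above, one directive per line.
EXAMPLE = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())

# Check a few hypothetical paths against the rules for any crawler ("*").
for path in ["/private/report.html", "/public/index.html", "/blog/post-1"]:
    verdict = "allowed" if parser.can_fetch("*", path) else "blocked"
    print(f"{path}: {verdict}")
```

Only /private/report.html is reported as blocked; paths not mentioned in any rule are allowed by default.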

Benefits Of Using Robots.txt Generators

Using robots.txt generators offers several advantages for website owners. These tools help you manage and control web crawlers efficiently. Below are some key benefits:

Time Efficiency

Creating a robots.txt file manually can be time-consuming. Using a generator saves time. You can create a file in minutes. This efficiency allows you to focus on other important tasks.

Error Reduction

Writing the file by hand can lead to errors, and even small mistakes can have big impacts: a single “Disallow: /” line blocks crawlers from your entire site. Robots.txt generators minimize these errors by providing templates and guidelines, which helps keep your file accurate.
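Much of the error reduction comes from generators emitting only known directives in the expected `Field: value` form. The Python sketch below applies the same idea as a tiny checker. The accepted directive list and the `lint_robots` function are assumptions for illustration; real crawlers usually ignore unknown lines silently rather than rejecting the file, which is why a typo like “Disalow” can go unnoticed.

```python
# Directives this sketch accepts (lower-case). This set is an assumption:
# crawlers differ in which other directives they understand.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return human-readable warnings for lines that look malformed."""
    warnings = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' separator")
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        if name.lower() not in KNOWN_DIRECTIVES:
            warnings.append(f"line {number}: unknown directive {name!r}")
        elif name.lower() in {"allow", "disallow"} and value and not value.startswith(("/", "*")):
            warnings.append(f"line {number}: path should start with '/'")
    return warnings


sample = """\
User-agent: *
Disalow: /private/
Allow public/
Disallow: private/
"""
for warning in lint_robots(sample):
    print(warning)
```

The sample produces one warning each for the misspelled directive, the missing colon, and the relative path.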

Here is a comparison table to highlight the benefits:

| Method | Time Spent | Error Rate |
|---|---|---|
| Manual Creation | Hours | High |
| Using Generators | Minutes | Low |

Using robots.txt generators is efficient and greatly reduces the risk of errors, though you should still review and test the output. This makes them an invaluable tool for website owners.

Top Free Robots.txt Generators

Creating a robots.txt file is essential for guiding search engines. It helps in controlling which parts of your site they can crawl. There are many tools available to generate this file. Below are some of the top free robots.txt generators that you can use.

Tool A Overview

Tool A offers a user-friendly interface for creating robots.txt files. It simplifies the process, making it accessible for beginners.

- Easy to use

- Interactive interface

- Supports custom rules

This tool allows you to include or exclude specific parts of your website. It also provides options to block certain user agents. The generated file is ready to be uploaded to your server.
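Under the hood, a generator like this mostly turns your choices into groups of User-agent, Allow, and Disallow lines. Here is a minimal sketch of that step in Python. The `Group` class, the `build_robots_txt` function, and the `BadBot` crawler name are hypothetical; this is not Tool A’s actual implementation.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Group:
    """One block of rules for one or more crawlers (hypothetical model)."""
    user_agents: list[str]
    disallow: list[str] = field(default_factory=list)
    allow: list[str] = field(default_factory=list)


def build_robots_txt(groups: list[Group], sitemap: Optional[str] = None) -> str:
    """Render groups (and an optional sitemap URL) as robots.txt text."""
    lines: list[str] = []
    for group in groups:
        lines += [f"User-agent: {agent}" for agent in group.user_agents]
        lines += [f"Allow: {path}" for path in group.allow]
        lines += [f"Disallow: {path}" for path in group.disallow]
        lines.append("")  # a blank line separates groups
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines).rstrip("\n") + "\n"


robots = build_robots_txt(
    [
        Group(["*"], disallow=["/private/"], allow=["/private/press-kit/"]),
        Group(["BadBot"], disallow=["/"]),  # hypothetical crawler to block
    ],
    sitemap="https://example.com/sitemap.xml",
)
print(robots)
```

Allow lines are written before Disallow lines on purpose: Google picks the most specific matching rule regardless of order, but simpler parsers (including Python’s `urllib.robotparser`) use the first match, so this order keeps both readings consistent for exceptions like /private/press-kit/.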

Tool B Overview

Tool B is another excellent option for generating robots.txt files. It focuses on providing more advanced features for experienced users.

| Feature | Description |
|---|---|
| Advanced Options | Includes settings for crawl delays and sitemaps. |
| Custom Rules | Supports specific directives for different bots. |

Tool B also offers a preview feature. This helps you see how your robots.txt file will work before you apply it. This can save you from potential indexing issues.
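A preview like Tool B’s can be approximated with Python’s `urllib.robotparser`, which reports the crawl delay and sitemap URLs it finds, as well as whether a path is crawlable. This is a minimal sketch with a hypothetical draft file (`site_maps()` needs Python 3.8 or later). Keep in mind that Googlebot ignores Crawl-delay, while some other crawlers honor it.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical draft, as a generator might produce it.
DRAFT = """\
User-agent: *
Crawl-delay: 10
Disallow: /checkout/

Sitemap: https://example.com/sitemap.xml
"""

preview = RobotFileParser()
preview.parse(DRAFT.splitlines())

print("Crawl delay for '*':", preview.crawl_delay("*"))
print("Sitemaps:", preview.site_maps())
print("/checkout/step-1 crawlable?", preview.can_fetch("*", "/checkout/step-1"))
```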

Credit: www.upgrowth.in

Features To Look For

Choosing the right free robots.txt generator tool can be a game-changer for your website’s SEO. Not all tools are created equal, so knowing what features to look for is crucial. Here are some key features that can help you make an informed decision.

User-friendly Interface

A user-friendly interface is essential. It allows you to generate a robots.txt file with ease. Look for tools that offer a clean and straightforward design. This ensures that even beginners can navigate the tool without any issues.

Icons and buttons should be well-labeled. Instructions should be clear and concise. This makes the process more efficient and less time-consuming.

Customization Options

Customization options are another crucial feature. They allow you to tailor the robots.txt file to meet your specific needs. Look for tools that let you add or remove rules easily. This gives you control over which parts of your site search engines can crawl.

Advanced customization options can include setting crawl delays, though Google ignores the Crawl-delay directive and only some other crawlers honor it. Blocking specific user agents or directories is also important. These features give you more flexibility and control over your site’s SEO.
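One detail catches people out when they block specific user agents: a crawler that matches its own User-agent group follows only that group and ignores the * group. Google documents this behavior, and Python’s `urllib.robotparser` behaves the same way, so it can illustrate the point. The crawler names below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# "ExampleBot" and "OtherBot" are hypothetical crawler names.
RULES = """\
User-agent: *
Disallow: /drafts/

User-agent: ExampleBot
Disallow: /archive/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

# OtherBot has no group of its own, so it falls back to the "*" rules.
print(parser.can_fetch("OtherBot", "/drafts/plan"))
# ExampleBot follows only its own group, so /drafts/ is NOT blocked for it.
print(parser.can_fetch("ExampleBot", "/drafts/plan"))
print(parser.can_fetch("ExampleBot", "/archive/2020"))
```

If ExampleBot should also stay out of /drafts/, repeat that Disallow line inside its own group.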

How To Use Robots.txt Generators

Using a robots.txt generator can simplify managing your website’s crawl instructions. These tools help create a file that directs search engines on which pages to crawl or avoid. Here’s how you can use these generators effectively.

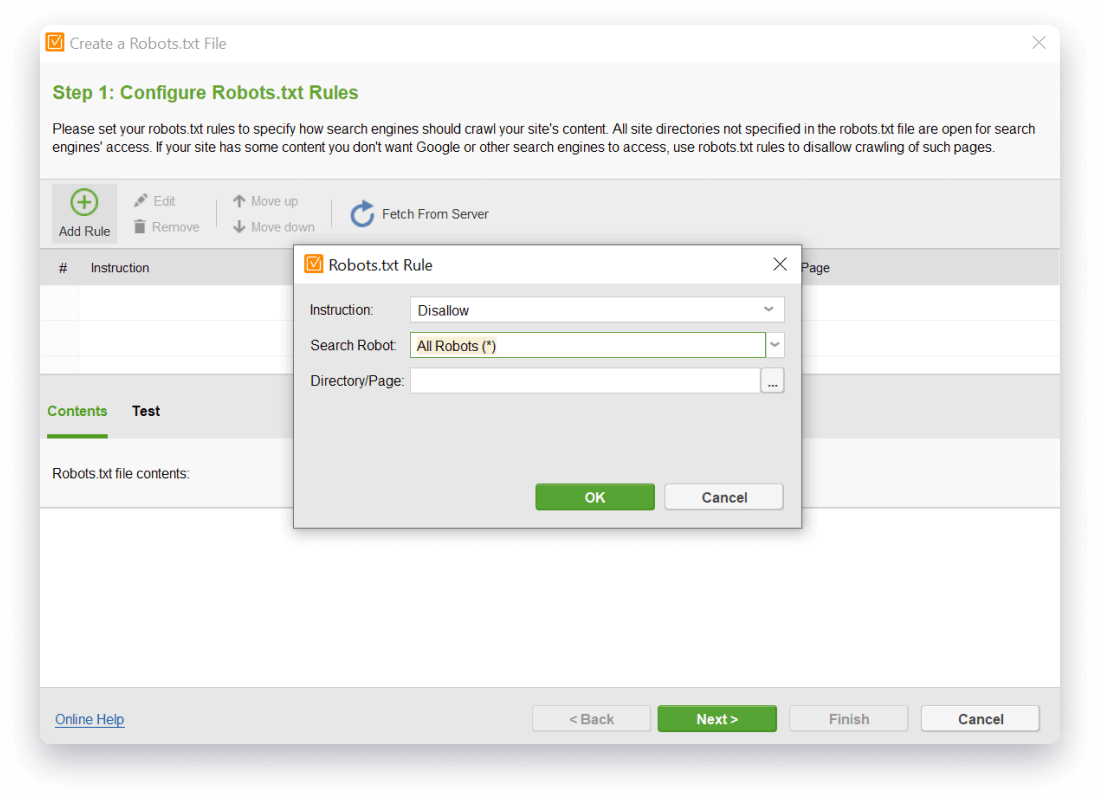

Step-by-step Guide

- Choose a Free Robots.txt Generator: Many tools are available online. Pick a reliable one.

- Enter Your Website URL: Input the URL of your website. This helps the tool generate the right file.

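- Select Crawl Directives: Specify which parts of your site should be crawled or skipped. Common options include:

  - Disallow: Prevents search engines from crawling specific pages or directories. A disallowed URL can still appear in search results if other pages link to it.

  - Allow: Permits crawling of specific pages inside a broader disallowed section.

  - Sitemap: Points search engines to your XML sitemap so they can find all your pages. Use the full URL, such as https://example.com/sitemap.xml.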

- Generate the File: Click the generate button. The tool will create a robots.txt file based on your inputs.

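- Download and Upload: Download the generated file. Upload it to your website’s root directory so that it is served at /robots.txt (for example, https://example.com/robots.txt). Crawlers only look for the file at that location.

- Test the File: Use a robots.txt testing tool, such as the robots.txt report in Google Search Console. Ensure the file works as expected and does not block important pages.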
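The final test step can also be scripted before you upload anything: list the pages that must stay crawlable and check them against the generated rules. This is a minimal Python sketch using `urllib.robotparser`. The `blocked_pages` helper and the page list are hypothetical, and the parser does not understand * or $ wildcards, so use a search engine’s own tester for rules that rely on them.

```python
from urllib.robotparser import RobotFileParser


def blocked_pages(robots_txt: str, must_crawl: list[str], agent: str = "*") -> list[str]:
    """Return the paths in must_crawl that robots_txt would block for agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in must_crawl if not parser.can_fetch(agent, path)]


# A generated file with an accidental rule that blocks the contact page.
generated = """\
User-agent: *
Disallow: /tmp/
Disallow: /contact
"""

important = ["/", "/contact", "/products/widget"]  # hypothetical key pages
problems = blocked_pages(generated, important)
if problems:
    print("Do not upload yet; these pages are blocked:", problems)
else:
    print("All important pages are crawlable.")
```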

Common Mistakes To Avoid

- Blocking Essential Pages: Avoid disallowing important pages like your homepage or contact page.

- Forgetting the Sitemap: Always include a link to your XML sitemap. This helps search engines find all your pages.

- Using Incorrect Syntax: Ensure the syntax is correct. Even small errors can cause big issues.

- Overusing Wildcards: Wildcards can be useful but overusing them may block more pages than intended.

- Not Testing the File: Always test the robots.txt file after uploading. This ensures it works correctly.
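Several of these mistakes come down to how paths match. Rules are prefixes, so a very short path blocks far more than intended, and a lone slash blocks the entire site. The Python sketch below checks a few one-rule files with `urllib.robotparser`. The `crawlable` helper is hypothetical, and because this parser ignores * and $ wildcards, it can only show plain prefix overreach, not wildcard overreach.

```python
from urllib.robotparser import RobotFileParser


def crawlable(rules: str, path: str) -> bool:
    """Parse rules and report whether any crawler ("*") may fetch path."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch("*", path)


# An empty Disallow value allows everything; a single "/" blocks everything.
print(crawlable("User-agent: *\nDisallow:", "/products/"))
print(crawlable("User-agent: *\nDisallow: /", "/products/"))

# Rules match by prefix, so a short path blocks more than intended.
print(crawlable("User-agent: *\nDisallow: /p", "/pricing"))
print(crawlable("User-agent: *\nDisallow: /p/", "/pricing"))
```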

These tools and tips can help you create an effective robots.txt file, one that helps search engines crawl the right pages and can improve your site’s visibility.

Integrating Robots.txt With SEO Strategy

Integrating a robots.txt file into your SEO strategy can significantly impact your website’s performance. This small file helps search engines understand which pages to crawl and which to skip. By using free robots.txt generator tools, you can make your website easier for search engines to crawl efficiently. Let’s delve into how you can use a robots.txt file to improve your site’s SEO strategy.

Improving Crawl Efficiency

Search engines allocate a limited crawl budget to every site, which means they crawl only a certain number of pages in a given period. This matters most for large sites with many URLs. A well-optimized robots.txt file keeps crawlers away from low-value pages so that more of that budget goes to your most important ones, helping your most valuable content get crawled and indexed sooner. Using free robots.txt generator tools, you can easily create a file that maximizes crawl efficiency.
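To see how a set of rules redistributes crawling, you can run a sample of your own URLs through it. This is a minimal Python sketch using `urllib.robotparser`, assuming a hypothetical URL list (for instance, one exported from your server logs) and rules that block internal search results and cart pages.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of low-value URL spaces.
RULES = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""

# Hypothetical URL inventory, e.g. exported from server logs.
urls = [
    "/products/blue-widget",
    "/products/red-widget",
    "/search?q=widget",
    "/search?q=widget&page=2",
    "/cart/add?id=42",
    "/blog/how-to-choose-a-widget",
]

parser = RobotFileParser()
parser.parse(RULES.splitlines())
crawlable = [u for u in urls if parser.can_fetch("*", u)]
skipped = [u for u in urls if u not in crawlable]
print(f"{len(crawlable)} of {len(urls)} URLs left for crawlers:", crawlable)
print("Skipped:", skipped)
```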

Blocking Sensitive Content

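Not all content on your website needs to be crawled. Some pages, such as internal search results or duplicate versions of other pages, add little value in search and can dilute your SEO. A robots.txt file lets you ask crawlers to skip these pages, and free robots.txt generator tools make it simple to specify which parts of your site they should avoid. However, robots.txt is publicly readable and only well-behaved crawlers follow it, so it does not protect private information; a blocked URL can even appear in search results if other pages link to it. Protect genuinely sensitive content with a password, and use a noindex directive on pages that must stay out of search results (the page must remain crawlable for search engines to see that directive).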

Case Studies

In this section, we will explore real-life examples of websites that have successfully used free robots.txt generator tools. These case studies will show the benefits and challenges encountered during the implementation process. Learn from their experiences to optimize your own website’s search engine performance.

Successful Implementations

Many websites have seen positive results by using free robots.txt generator tools. For instance, a popular e-commerce site improved its search engine ranking within a few months. By correctly configuring their robots.txt file, they were able to block unnecessary pages. This led to better crawl efficiency and higher visibility for important pages.

Another example is a small blog site that struggled with duplicate content issues. After generating a proper robots.txt file, the blog saw a significant drop in duplicate content problems. This helped boost their overall site authority and improved their rankings.

Lessons Learned

From these case studies, several key lessons emerge. First, it’s vital to regularly review and update your robots.txt file. Search engines change their algorithms, and your site’s content evolves. Regular updates ensure continued compliance and optimization.

Secondly, always test your robots.txt file before implementing it live. Many free tools offer testing features. Use them to avoid blocking important content accidentally. Lastly, monitor your site’s performance post-implementation. Use analytics to track changes in crawl rates and indexing.
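When you update the file, it helps to compare the old and new versions against the same list of URLs, so that any page whose crawlability changes is flagged before the new file goes live. This is a minimal sketch in Python using `urllib.robotparser`; the `crawl_changes` function and the sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def _parser(text: str) -> RobotFileParser:
    """Build a parser from robots.txt text."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def crawl_changes(old: str, new: str, paths: list[str], agent: str = "*") -> dict[str, tuple[bool, bool]]:
    """Map each path whose crawlability differs to (old_allowed, new_allowed)."""
    before, after = _parser(old), _parser(new)
    changes = {}
    for path in paths:
        was, now = before.can_fetch(agent, path), after.can_fetch(agent, path)
        if was != now:
            changes[path] = (was, now)
    return changes


old_rules = "User-agent: *\nDisallow: /tmp/\n"
new_rules = "User-agent: *\nDisallow: /tmp/\nDisallow: /blog/\n"
print(crawl_changes(old_rules, new_rules, ["/", "/tmp/cache", "/blog/launch-post"]))
```

Here the new rule silently blocks the blog, which is exactly the kind of change worth catching before it reaches your live site.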

These lessons highlight the importance of a well-maintained robots.txt file. They also demonstrate how free generator tools can simplify the process and lead to successful outcomes.

Credit: www.link-assistant.com

Future Of Robots.txt Generators

Robots.txt generators are evolving rapidly. As technology advances, these tools are becoming more efficient and intelligent. Integrating AI and machine learning could bring more automation and precision to SEO workflows, letting webmasters optimize their websites with less effort.

AI And Machine Learning

AI and machine learning are transforming how robots.txt generators work. These technologies can analyze vast amounts of data quickly. They can identify patterns and predict the best configurations for robots.txt files. This helps in enhancing website performance and search engine rankings.

Machine learning models could adapt as search engines change how they crawl, helping keep generated robots.txt files up to date. AI-powered tools can also provide insights and recommendations based on the latest SEO trends.

Upcoming Trends

Several upcoming trends are shaping the future of robots.txt generators. Here are a few key trends to watch:

- Integration with CMS: Future tools will integrate seamlessly with content management systems. This will streamline the process of generating and updating robots.txt files.

- Real-time Monitoring: Real-time monitoring features will become standard. These will alert webmasters to any issues with their robots.txt files immediately.

- Enhanced User Interfaces: User interfaces will become more intuitive. This will make it easier for non-technical users to create and manage robots.txt files.

These trends indicate that the future of robots.txt generators is bright. The tools are becoming smarter, easier to use, and more effective. This will help webmasters stay ahead in the competitive world of SEO.

Frequently Asked Questions

What Is A Robots.txt File?

A robots.txt file is a plain text file, placed in a website’s root directory, that tells search engine bots which pages on the site they may crawl.

Why Use A Robots.txt Generator?

A robots.txt generator simplifies creating the file and helps ensure it is correctly formatted and effective in guiding search engine bots.

How To Generate A Robots.txt File For Free?

Use a free online robots.txt generator to create a customized robots.txt file for your website, then upload it to your site’s root directory.

Can I Block Specific Pages Using Robots.txt?

Yes, you can keep crawlers away from specific pages or directories by listing their paths in the robots.txt file with the “Disallow” directive.

Conclusion

Free robots.txt generator tools simplify website management. They save time and effort and help ensure search engines crawl your site correctly. Easy to use and effective, they help improve SEO. Many options are available online. Choose one that suits your needs best.

Start using a robots.txt generator today. Enhance your site’s performance effortlessly.